Nvidia AI Can Generate Virtual Worlds From Videos

Many of you reading this are probably taking a very short break from playing Red Dead Redemption 2. Creating the virtual worlds gamers love to explore isn't easy. It can take tens of thousands of hours and a good amount of money, and those worlds often aren't as realistic as players were hoping.

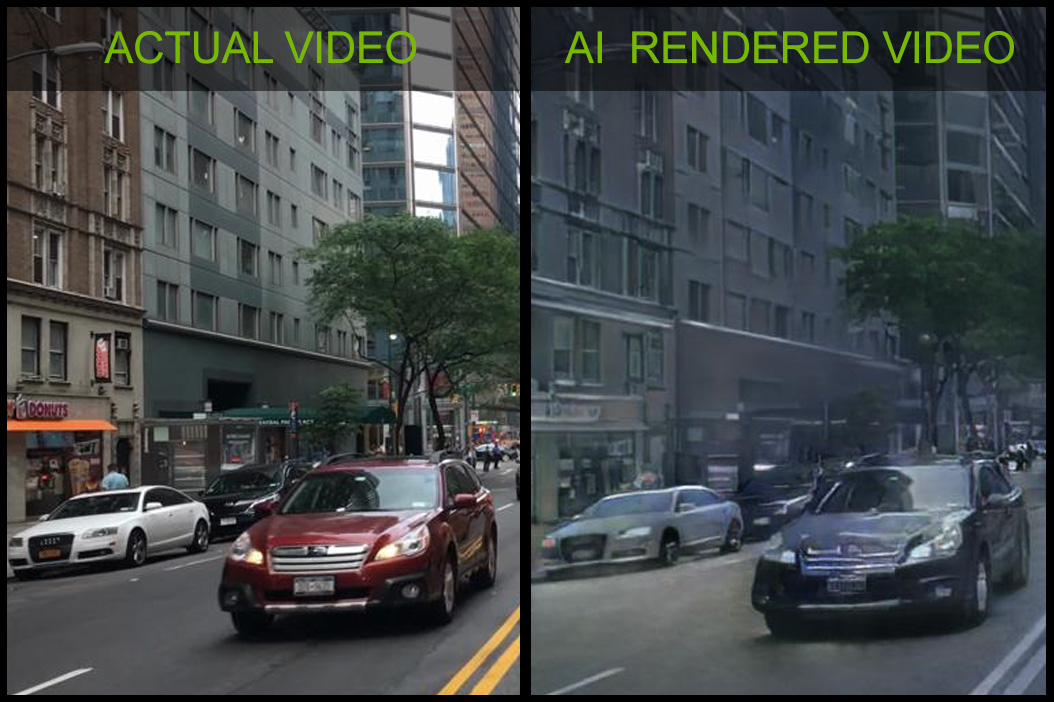

To cut the cost and time of building those gaming worlds, Nvidia is turning to artificial intelligence. The company has created a neural network that can generate realistic, interactive virtual environments simply by watching real-life videos. Neural networks, also called "deep" networks, are loosely inspired by the human brain. This is the first time Nvidia has combined machine learning with computer graphics for image generation.

Nvidia AI Was Trained With Videos of Cars Driving Through Cities

The neural network was trained on videos of cars driving through different cities. The researchers extracted objects such as cars, trees, and buildings from the footage and used this information to teach the Nvidia AI to recognize specific objects.

According to the company, the network operates on high-level descriptions of a scene, such as segmentation maps or edge maps. These describe where objects are located and what they generally are: whether a particular part of the image contains a car or a building, for example, or where the edges of an object lie.
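To make "segmentation map" and "edge map" a little more concrete, here is a minimal sketch of what that kind of input looks like as data. It is not Nvidia's code, and the article doesn't specify the exact input format. The class IDs (road, car, building, tree), the 4 x 4 frame and the helper names `one_hot` and `edge_map` are all hypothetical. A segmentation map labels every pixel with a class, and an edge map marks where one object's region meets another's.

```python
# Hypothetical class IDs; the label set Nvidia actually used is not given in the article.
CLASSES = {0: "road", 1: "car", 2: "building", 3: "tree"}

def one_hot(seg):
    """Turn an H x W map of class IDs into one binary H x W channel per class."""
    h, w = len(seg), len(seg[0])
    return [
        [[1 if seg[y][x] == c else 0 for x in range(w)] for y in range(h)]
        for c in sorted(CLASSES)
    ]

def edge_map(seg):
    """Mark a pixel 1 if its right or lower neighbour belongs to a different class."""
    h, w = len(seg), len(seg[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            right = x + 1 < w and seg[y][x + 1] != seg[y][x]
            down = y + 1 < h and seg[y + 1][x] != seg[y][x]
            edges[y][x] = 1 if (right or down) else 0
    return edges

# A tiny 4 x 4 "frame": buildings along the top, a car sitting on the road below.
frame = [
    [2, 2, 2, 2],
    [2, 2, 2, 2],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]

channels = one_hot(frame)
print(len(channels))    # → 4 (one channel per class)
print(channels[1])      # the "car" channel: 1 wherever the car is
print(edge_map(frame))  # 1s along the building/road and car/road boundaries
```

A map like this says *what* is *where* but nothing about colour, texture or lighting. That gap is what the network has to fill in from what it learned watching real footage.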

The AI then fills in the rest of the details based on what it learned from the real-life training videos. This is roughly what the human brain does: we don't take in every element of a scene, and our brain fills in the gaps with what we've learned and experienced.

The neural network can model the appearance of the world, including lighting, materials, and dynamics. Because the world is generated entirely by the AI, a scene can easily be modified to remove, edit, or add objects.

Nvidia Demo on Display in Montreal

The tech is currently on display at the NeurIPS conference in Montreal, Canada. The company's research team created a simple driving game that lets attendees navigate an AI-generated environment.

While the research is still in its early stages, it's promising that this tech could make it much easier and cheaper to create virtual environments for all types of applications.

For instance, Rockstar might want the next Grand Theft Auto game to take place in London. Instead of meticulously researching and designing every detail, it could simply feed Nvidia's AI videos of the city. That would at least create a base for game designers to tweak and modify, saving countless hours.

"The capability to model and recreate the dynamics of our visual world is essential to building intelligent agents," the researchers said. "Apart from purely scientific interests, learning to synthesize continuous visual experiences has a wide range of applications in computer vision, robotics, and computer graphics."
