The Future of Speech Recognition Technology

Speech recognition has become a focus for many tech giants over the last year, and the decades-old technology is rapidly gaining importance. It may surprise you to learn that computers have been able to recognize voice commands since the 1990s, yet these capabilities never quite lived up to their potential. Now, thanks in part to rapid improvements in AI and cloud data, truly conversational interfaces are becoming a real possibility. The future of speech recognition technology looks brighter and brighter.

Voice services such as Microsoft’s Cortana, Apple’s Siri, Google’s Assistant and Amazon’s Alexa are growing increasingly popular, making conversational interfaces a top priority for tech giants. These applications aim to handle complex interactions using only the human voice, without the need for a keyboard. The tech giants’ renewed focus on voice processing is likely to spread voice interfaces to other industries as well.

Google reported at a conference in 2017 that it had reduced its Word Error Rate from 8.9% to 4.5% in just one year by using deep learning algorithms. Improvements like these bode well for the future of voice interfaces and are good news for industries that stand to gain from better voice interactions.
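For readers curious about what a Word Error Rate measures, here is a minimal sketch in Python. It assumes the common textbook definition: the number of word substitutions, deletions and insertions needed to turn the recognizer's output into the reference transcript, divided by the number of words in the reference. The function name `word_error_rate` and the sample sentences are illustrative only and are not taken from Google's own evaluation.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from output
                curr[j - 1] + 1,     # insertion: extra word in output
                prev[j - 1] + cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)


# One wrong word out of five gives a 20% error rate.
print(word_error_rate("turn on the kitchen lights",
                      "turn on the chicken lights"))
```

By this measure, a rate of 4.5% would mean roughly one word in every 22 is transcribed incorrectly, compared with about one in 11 at 8.9%.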

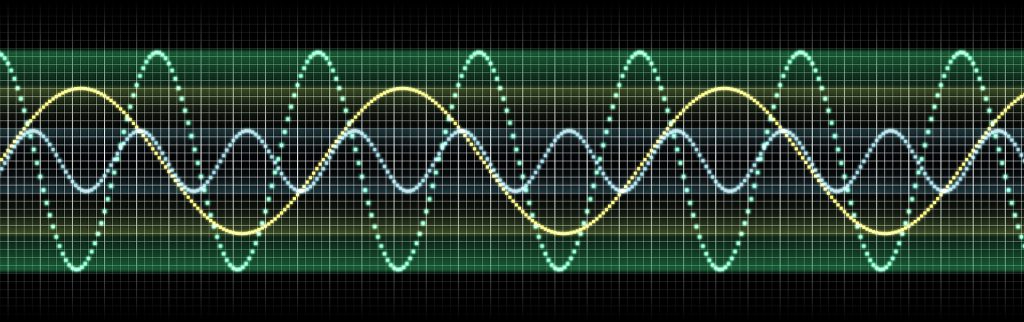

Recognizing the nuances of the voice

Speech recognition is expected to grow more sophisticated in the years ahead. Once the basic content of speech is reliably captured, the next step is expected to be analysis of the texture of spoken utterances. There will come a time, possibly in the next 5-10 years, when algorithms will easily understand not only what is being said, but also how it is being said. They will be able to pick up on tonal inflection and the other characteristics that add meaning to the spoken word, and make such nuances part of how they comprehend speech. They may therefore be able to use the voice to gauge the speaker’s mood, level of distress and strength of conviction, and then use this data to craft a fitting response, assess security risks, judge the speaker’s mental state and so on. Speech recognition systems may ultimately be able to recognize things like sarcasm and tailor their responses to suit the speaker’s tone.

Screen-liberation

Speech recognition is predicted to liberate people from their intense relationships with their screens. It is expected to support services such as sending emails by voice instruction, reading out recipes while the user cooks and advising users on the best available travel routes, creating experiences with technology that are genuinely hands-free.

Predictions for the future

It is estimated that by 2020, voice searches will comprise half of all searches. It is further estimated that by the same year, 30% of all searches are going to be done without the use of a screen. The number of US smart speakers in 2020 is expected to rise to 21.4 million. The speech recognition market is expected to be worth $601 million by 2019.
